Imagine a hypothetical “Dear Abby” letter to the science community: “I am the principal investigator and corresponding author on a paper detailing research findings in our lab. A leading investigator at another university contacted me to say that she cannot duplicate our results. I re-ran the samples analyzed by my post-doc and I couldn’t either. What do I do? Sincerely, Panicked P.I.”

Results that cannot be duplicated are a recognized problem within the scientific community, one that has even been called a “reproducibility crisis.” According to Thomas Insel, the former director of the National Institute of Mental Health, nearly 80 percent of science from academic labs, even science published in the best journals, cannot be replicated.

“Our lack of a precise vocabulary — in particular the fact that we don’t have a word for ‘you didn’t tell me what you did in sufficient detail for me to check it’ — contributes to the crisis of scientific reproducibility,” writes Philip B. Stark, professor in the Department of Statistics at the University of California, Berkeley.

Rigor + Transparency = Reproducibility

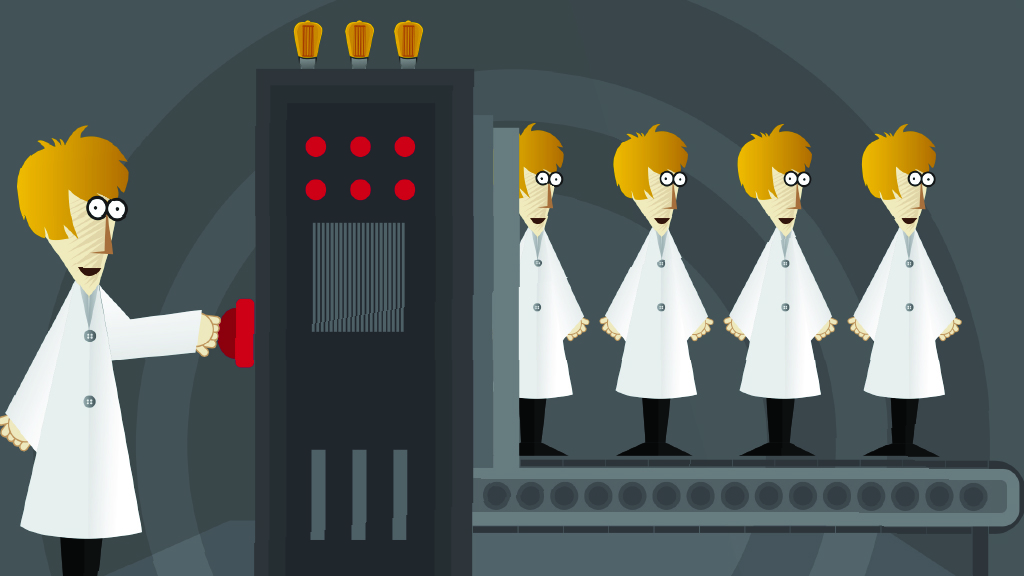

It’s a pretty straightforward equation. But why is it so hard to ensure rigor and transparency? One answer is that a PI may be ignoring elements such as randomization, blinding and the effect of sex differences within subjects. Or perhaps scientists rely on ad hoc choices or a “secret sauce” to make their experiments work. When they withhold these details from other researchers to maintain a competitive edge, no one else can replicate the experiments.

Rigor may also be compromised when researchers overemphasize “big picture” findings. Treating chance patterns in a random set of data as interesting trends is known as the “Texas Sharpshooter” fallacy. Imagine a shooter firing at a barn. Only later does he draw a target around a cluster of bullet holes. He was only aiming for the barn, but outsiders who come upon the scene may believe he meant to hit the target.

So, what is the key to avoiding such a fallacy? “Investigators should ensure that specific hypotheses, variables and analytical methods are established prior to the initiation of the research and maintained throughout, avoiding the temptation to retrofit a more interesting hypothesis to data that has already been collected,” posits Kirstin Holzschuh, executive director of the Research Integrity and Oversight Office at UH. “Should unexpected similarities or clusters arise, as they sometimes do, these presumed relationships must then be tested independently with new data in a study designed to examine their validity prior to sharing the relationship as conclusive.”
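The sharpshooter effect is easy to see in a small simulation. The Python sketch below is illustrative only and is not drawn from any study mentioned here: the function names, the 40 candidate variables, the sample sizes and the random seed are all assumptions chosen for the demonstration. Every variable is pure noise, so any relationship it "finds" is a target drawn after the shots were fired. The second step does what Holzschuh recommends and retests the chosen relationship on new data.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def sharpshooter_trial(rng, n_vars=40, n_samples=30, n_replication=200):
    """Pick the 'best' of many noise variables, then retest it on new data.

    All parameter values are hypothetical defaults for illustration.
    """

    def noise(n):
        return [rng.gauss(0, 1) for _ in range(n)]

    # Exploratory phase: the outcome and every candidate are independent noise.
    outcome = noise(n_samples)
    candidates = [noise(n_samples) for _ in range(n_vars)]
    correlations = [pearson(c, outcome) for c in candidates]

    # Drawing the target afterwards: keep only the strongest correlation.
    exploratory_r = max(correlations, key=abs)

    # Replication phase: fresh, independent measurements of the chosen
    # variable and the outcome. Both are still noise, so no true link exists.
    replication_r = pearson(noise(n_replication), noise(n_replication))
    return exploratory_r, replication_r


def mean_abs(values):
    """Average absolute value of a list of correlations."""
    return sum(abs(v) for v in values) / len(values)


rng = random.Random(2019)  # fixed seed so the run is repeatable
results = [sharpshooter_trial(rng) for _ in range(20)]
mean_exploratory = mean_abs([e for e, _ in results])
mean_replication = mean_abs([r for _, r in results])

print(f"mean |r| when the target is drawn afterwards: {mean_exploratory:.2f}")
print(f"mean |r| when retested on new data:           {mean_replication:.2f}")
```

Under these assumptions, the correlation picked after the fact should look far stronger than the one measured on new data, even though nothing real connects any of the variables.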

Publish This

Recently, many highly regarded journals united in their effort to support reproducibility, with Science and Nature most notably at the forefront. They adopted editorial practices such as providing more space for scientists to explain their research fully, examining statistics more diligently and allowing researchers to include their raw data.

Many in the scientific community believe that publishing negative findings is detrimental, but a failure to reproduce a result is not always a bad outcome. “When scientists cannot confirm the results from a published study, to some it is an indication of a problem, and to others, it is a natural part of the scientific process that can lead to new discoveries,” said the authors of Reproducibility and Replicability in Science (2019).

Dear Panicked P.I.

Taking all this into account, what would Dear Abby’s answer be to our unhappy, flailing researcher? Always have your post-docs and fellow researchers record everything for transparency’s sake. This raw data may be published one day, so be overly diligent. Some believe that the Internet of Things will help scientists curate, store and share their digital findings in an openly accessible repository.

But before that, maintain a rigorous scientific process in all of your clinical trials. If a finding can’t be replicated, perhaps there’s an even greater question looming... and you, Panicked, may be the first to answer it.